Vulnetic AI is a high-performance hacking agent built for serious penetration testing at a fraction of the typical cost.

Aikido is a security platform for code and cloud, designed to automatically find and fix vulnerabilities in one central system.

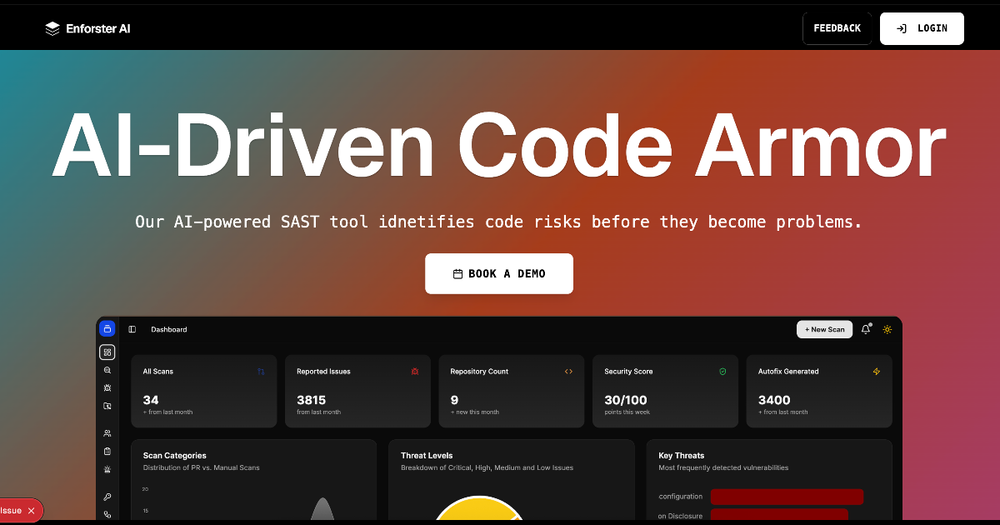

An AI-native SAST tool for code security that detects vulnerabilities, secrets, IaC issues, and AI model security risks, and offers actionable AI-generated fixes.